Codebase

How many codebases make up a deployable? How many deployables can you build from one codebase?

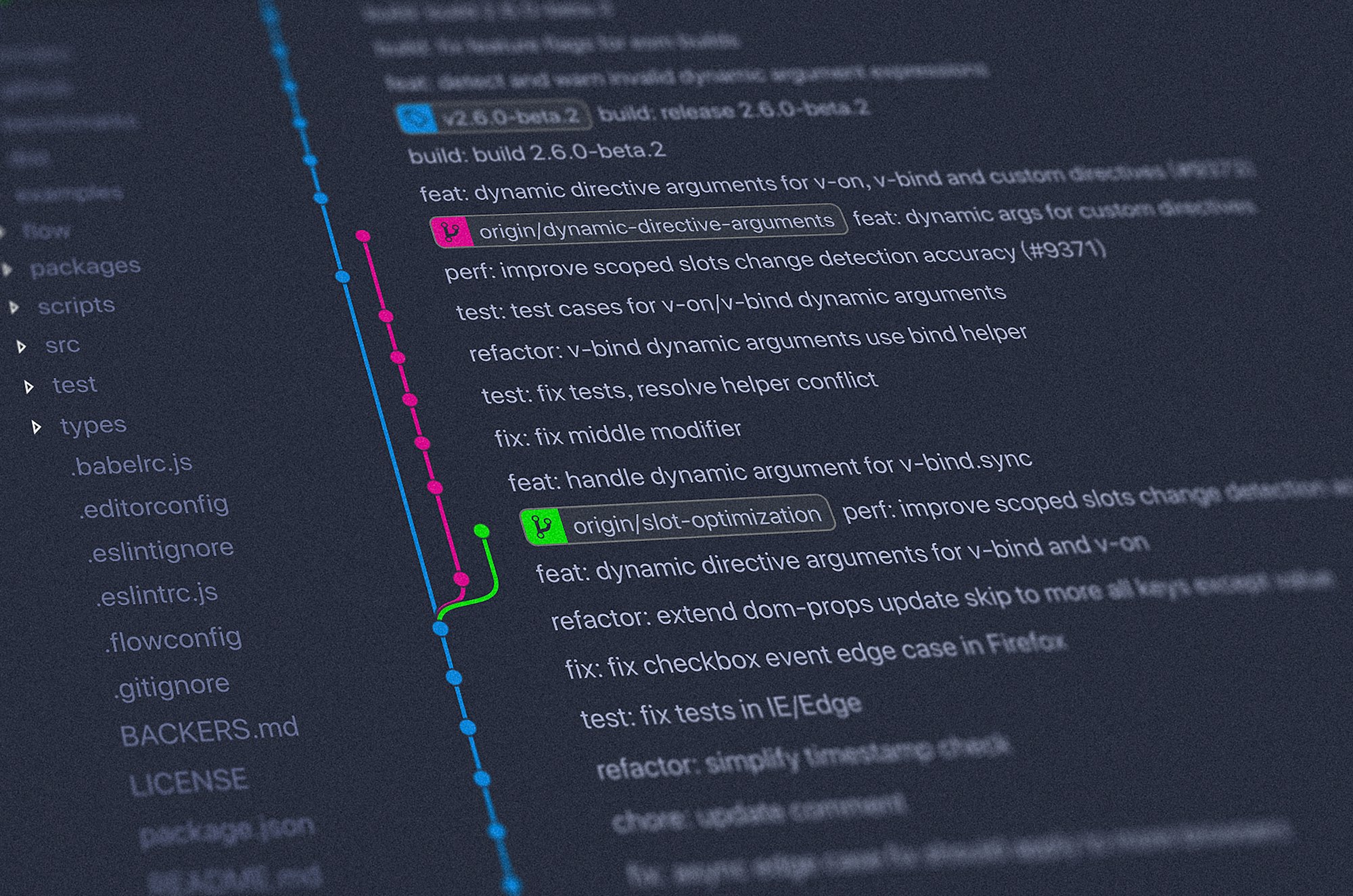

One codebase tracked in revision control, many deploys

Thankfully, the days of applications being developed or maintained without some kind of source code control have - for the most part - passed into legend. Theoretically then, this should be a quick-checkbox, nods-all-around, head-to-the-pub requirement, right? Well, unfortunately not.

What’s the issue then?

First off, there’s a bit of nuance to the requirement as defined by 12F. One of the key points is that each repository should be responsible for containing a single build - either a library, or an application, but only one deliverable output. The legendary single repo at Google would fail, for example, but a surprisingly large number of companies commingle the code for their libraries and final applications.

You may be asking yourself, “Is that really a problem?” Well, so long as people use serious internal discipline to avoid leaking data it might not be, but why put that burden onto your team when, by separating the repositories and builds, you can enforce it through tooling? If you believe that your libraries and applications (or multiple applications) are never out of sync with each other then a) you have a single inefficiently built/delivered application, and b) you are probably fooling yourself.

Why does it help?

One of the biggest benefits is that you should never be in a situation where one team can’t do a rollback (either of code or released product) because another unrelated team would be impacted.

This is also one of the key benefits that people talk about when they discuss microservices, even though it has nothing whatsoever to do with deployment. In 12F, each repository can very easily have its own lifecycle, its own testing process, and its own release schedule - whether the repository represents a single sub-component or a full-stack application.

Common challenges

It’s not uncommon to build several artifacts from a single codebase. One company I work with has a site-installed and a SaaS product built from the same basic code. While this “works”, by not separating the repository by artifact (two builds plus a shared library) they create a few problems. First, the deployments of the two deliverables are by necessity tied to one another. Second, there’s no automatic enforcement of code discipline, so it’s possible to inject business logic into code used only by a single artifact without that being immediately obvious.

You can always write bad code, of course, but structuring your projects to make as many mistakes as possible both difficult and obvious provides some serious benefits - and you can enjoy them for free once you’ve got everything set up correctly.

Breaking multi-artifact codebases into separate systems also highlights any situations in which code that seems to be used only to generate one artifact is actually being referenced by another one. Especially if you have separate teams, this can be a source of hidden interdependencies. Personally I don’t see this as a risk of moving to separate repositories and deliverables, but a benefit. You have the issue anyway; being forced to deal with it lets you mitigate or plan, as needed, without forgetting about it.
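If you want a preview of those hidden interdependencies before you split anything, a small script can flag them. The following is only a minimal sketch, assuming a Python codebase in which each artifact's private code lives under its own package prefix; the function names and the prefix-to-artifact mapping are hypothetical, and other languages would need their own import scanner.

```python
from __future__ import annotations

import ast


def imported_modules(source: str) -> set[str]:
    """Return the dotted names of modules imported by a Python source string."""
    found: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Relative imports (level > 0) stay inside the current package, so skip them.
            found.add(node.module)
    return found


def cross_artifact_imports(
    source: str, owner: str, private_prefixes: dict[str, str]
) -> set[str]:
    """Return imports that reach into code private to an artifact other than `owner`.

    `private_prefixes` maps a package prefix to the artifact that owns it
    (a hypothetical convention for this sketch).
    """
    hits: set[str] = set()
    for module in imported_modules(source):
        for prefix, artifact in private_prefixes.items():
            inside = module == prefix or module.startswith(prefix + ".")
            if inside and artifact != owner:
                hits.add(module)
    return hits
```

Running this over every file owned by one artifact gives you a list of references to deal with, either by moving the code into a shared library or by removing the dependency.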

Workarounds

The most common situation that might need a workaround is the case in which you have one codebase generating two separate artifacts. This may include multiple build targets, each potentially with an installer, but sharing the bulk of the code. Luckily there’s an easy fix for this.

- Create a new repository for each generated artifact. You can start top-down (one per product), bottom-up (one per DLL/JAR/etc.), or do it all at once.

- Move the bare minimum distinct code into the new repositories. This could be as small as the installer code.

- Make sure that the original repository saves its artifacts somewhere accessible. This could be a shared drive, a local NuGet server, or similar, but so long as it’s available it doesn’t really matter.

- Alter the builds in the new repository to reference the shared artifacts.
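The last two steps can be sketched in a few lines. This is a rough illustration rather than a recommended tool: it assumes the "somewhere accessible" is a plain shared directory laid out as `name/version/file`, and the `publish` and `resolve` helpers are hypothetical names. A real package server such as NuGet would replace both functions with its own publish and restore commands.

```python
from pathlib import Path
import shutil


def publish(artifact: Path, store: Path, name: str, version: str) -> Path:
    """Copy a built artifact into the shared store under name/version/."""
    target_dir = store / name / version
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / artifact.name
    shutil.copy2(artifact, target)
    return target


def resolve(store: Path, name: str, version: str) -> Path:
    """Return the artifact published for name/version, or raise if it is missing."""
    target_dir = store / name / version
    if not target_dir.is_dir():
        raise FileNotFoundError(f"{name} {version} has not been published to {store}")
    files = sorted(p for p in target_dir.iterdir() if p.is_file())
    if len(files) != 1:
        raise RuntimeError(f"expected exactly one file in {target_dir}, found {len(files)}")
    return files[0]
```

The original repository's build calls `publish` once it has produced the shared library; each downstream build calls `resolve` with a pinned version. Pinning the version is what lets the two deliverables move on separate schedules.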

Is it that easy? Likely not, but it’s not actually much harder. The important thing is to get it done. By the time that you have a functioning build again, you’ll likely have identified several obvious pieces of code that should be moved into downstream repositories. Keep at it and before long you’ll start seeing the benefits of faster builds and fewer defects!

How can we benefit from this?

Really there are several good reasons to follow this approach. The first is either discipline or clarity: either the fact that two deliverables (libraries, binaries, etc) are intertwined will become painfully apparent, resulting in clarity, or you’ll be able to separate them cleanly resulting in automatically enforced discipline that they stay that way. Each unit will be able to be built faster, and in trendy microservice fashion can choose to set a separate build/test/release schedule based on the needs of the business.

From a business standpoint, separating the codebases also gives you a separate asset. Now, it may not be something that you ever choose to monetize or release separately, but if you ever need to, doing it “right” from day one will not only be far simpler, it will help you through the due-diligence project.

One more angle

If you have a system of any reasonable level of complexity it probably interfaces with external actors. In an ideal world you’ll be using an SDK provided by your integration partner that makes your life easy.

In the real world you’re probably happy if you get a documented API. Chances are that you have a few callouts to the remote system scattered around your code - this is perfectly normal, but not ideal.

A pattern that we follow fairly religiously is that, whenever we have to interface to a remote system, we create the SDK that we wanted to get from our partner. We may not create it completely, but we’ll build out a small library that deals with the minutiae of defining the model and handling the communication - the idea being that users of the SDK shouldn’t need to know any of those details. You’ve likely already handled these scenarios in your code; we’re just separating them out.

Try to make the SDK as clean as possible without any knowledge of how you’ll be using its functionality. It may seem like a little more work to do it this way but it often ends up reducing the overall time spent working on an integration - and as a bonus, you may even be able to sell it off to your partner (either for money or a combination of goodwill and a reduction in ongoing development costs). Win-win!
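To make the shape of such a library concrete, here is a minimal sketch. Everything in it is invented for illustration: the `Invoice` model, the `/invoices/{id}` path, and the response field names stand in for whatever your partner's documented API actually exposes. The transport is passed in as a plain callable so the SDK carries no knowledge of the application that uses it.

```python
from dataclasses import dataclass
from typing import Any, Callable, Mapping, Optional

# A transport takes (method, path, body) and returns the decoded JSON response.
# Hypothetical signature; in practice this would wrap your HTTP client of choice.
Transport = Callable[[str, str, Optional[Mapping[str, Any]]], Mapping[str, Any]]


@dataclass(frozen=True)
class Invoice:
    """The model: callers see typed fields, not raw response dictionaries."""

    invoice_id: str
    amount_cents: int
    currency: str


class PartnerError(Exception):
    """Raised when the partner's response cannot be understood."""


class PartnerClient:
    """The communication layer: paths and payload shapes are hidden from callers."""

    def __init__(self, transport: Transport) -> None:
        self._transport = transport

    def get_invoice(self, invoice_id: str) -> Invoice:
        payload = self._transport("GET", f"/invoices/{invoice_id}", None)
        try:
            return Invoice(
                invoice_id=str(payload["id"]),
                amount_cents=int(payload["amount_cents"]),
                currency=str(payload["currency"]),
            )
        except (KeyError, TypeError, ValueError) as exc:
            raise PartnerError(f"unexpected invoice payload: {payload!r}") from exc
```

Because the client depends only on the injected transport, it can live in its own repository with its own tests and release schedule, and the applications that use it never touch the partner's wire format.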

Conclusion

No reasonable modern vendor charges by the repository - if your internal IT department does, hold their feet to the fire until they modernize. There’s no good reason other than habit to use a single massive codebase, and all kinds of reasons not to. Happy refactoring!